Supercharging Data Science | Using GPU for Lightning-Fast Numpy, Pandas, Sklearn, and Scipy | by Ahmad Anis | Red Buffer | Medium

Python Pandas Tutorial – Beginner's Guide to GPU Accelerated DataFrames for Pandas Users | NVIDIA Technical Blog

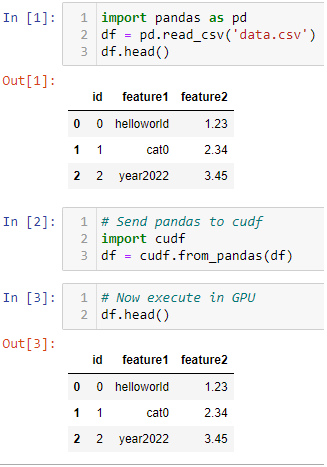

Gilberto Titericz Jr on X: "Want to speedup Pandas DataFrame operations? Let me share one of my Kaggle tricks for fast experimentation. Just convert it to cudf and execute it in GPU"
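
The trick described in that post works like this: move a pandas DataFrame into cuDF, run the operation there, and copy the result back to pandas. The sketch below is minimal and assumes RAPIDS cuDF is installed on a machine with a supported NVIDIA GPU. The helper names `to_gpu` and `to_cpu` are hypothetical. When `cudf` cannot be imported, the code falls back to plain pandas, so it still runs on a CPU-only machine.

```python
# Minimal sketch of the "convert to cuDF" trick. Assumption: RAPIDS cuDF is
# installed and a CUDA GPU is available; otherwise plain pandas is used.
import pandas as pd

try:
    import cudf  # GPU DataFrame library from RAPIDS
    HAS_CUDF = True
except ImportError:
    HAS_CUDF = False


def to_gpu(df: pd.DataFrame):
    """Hypothetical helper: copy a pandas DataFrame to GPU memory if cuDF is available."""
    return cudf.from_pandas(df) if HAS_CUDF else df


def to_cpu(obj):
    """Hypothetical helper: bring a cuDF DataFrame/Series back to pandas."""
    return obj.to_pandas() if HAS_CUDF else obj


pdf = pd.DataFrame({"key": ["a", "b", "a", "b"], "value": [1, 2, 3, 4]})

gdf = to_gpu(pdf)
# When gdf is a cudf.DataFrame, this groupby/sum executes on the GPU.
result = to_cpu(gdf.groupby("key")["value"].sum()).sort_index()
print(result)
```

Both `cudf.from_pandas` and `.to_pandas()` copy data between host and device memory. The approach therefore pays off only when the work done on the GPU outweighs those transfers, for example when many operations run on a large frame before the result is copied back.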

An Introduction to GPU DataFrames for Pandas Users - Data Science of the Day - NVIDIA Developer Forums

Pandas DataFrame Tutorial - Beginner's Guide to GPU Accelerated DataFrames in Python | NVIDIA Technical Blog